Cross-functional

Head of Product, Product Managers, Engineers, Subject Matter Experts, Senior Designer & Solution Architects

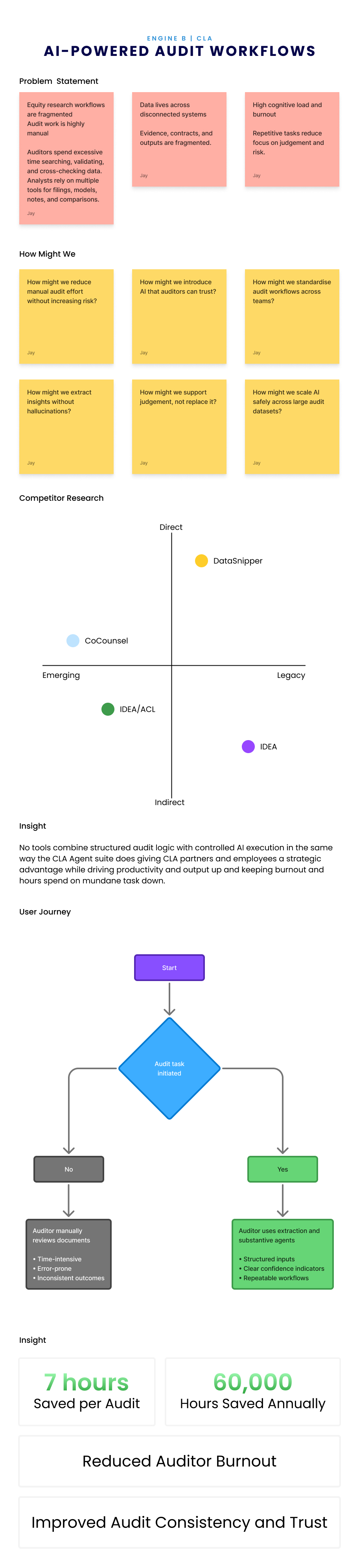

I led the design of an AI-first substantive testing platform inside a global consulting firm, standardising how audits are executed across teams and geographies. Working as the first product designer, I established the product design practice, partnered closely with engineering and audit SMEs, and designed a scalable agent ecosystem that preserved human judgment while improving consistency and audit quality. The platform reduced fragmented workflows to a single global standard and saved an estimated 30 to 40 thousand auditor hours.

When I joined EngineB, there was no product design function.

No established design practice.

No shared product rituals.

No consistent way for product, engineering, and the business to collaborate.

Shortly after I joined, EngineB was acquired by CLA, a top-20 US consulting firm auditing governments, universities, healthcare systems, and public institutions. With that acquisition, what we were building stopped being experimental. It became infrastructure for a highly regulated, risk-averse industry.

The mandate was ambitious and unforgiving:

Modernise substantive audit testing with AI without compromising trust, compliance, or methodology.

Substantive testing is one of the most critical parts of an audit. A representative subset of financial data is tested to draw conclusions about the whole. The integrity of that process underpins the final financial report.

In practice, the reality was fragmented.

Across CLA, the same substantive test was being run in six to eight different ways depending on team, region, or industry. Sampling was often not truly random. Auditors relied heavily on fragile Excel workbooks stitched together from five or six data sources. Calculations broke. Links failed. Confidence eroded.

This was not just inefficient. It was risky.

Audit conclusions rely on consistency and defensibility. But inconsistency had become systemic. The cost was operational, reputational, and human. Auditors were burning out under the weight of manual work, especially during peak audit seasons.

Because EngineB had never had a product designer, my role quickly expanded beyond interface design.

Alongside the Head of Product, I helped establish the foundations of how product work happened. This included defining collaboration models, setting standards for handovers, aligning design with engineering and QA, and embedding audit SMEs directly into the product process.

My focus became less about individual features and more about systems.

How do we standardise audit execution globally?

How do we introduce AI without removing human accountability?

How do we design for adoption inside an already complex enterprise tech stack?

Design became the connective layer between business goals, technical feasibility, and audit reality.

Early on, we made a deliberate decision not to build isolated tools.

Instead, we designed a platform.

At the foundation sat EngineB’s existing integration engine, a mature system responsible for ingesting and normalising core financial data such as trial balances and general ledgers. This became the bedrock of everything we built.

On top of that, we created a new suite of extraction agents. Many critical audit artefacts, such as invoices, never flowed through the legacy system due to sensitivity or data-retention constraints. These agents used OCR and LLMs to extract large volumes of information quickly and accurately, without retaining data unnecessarily.

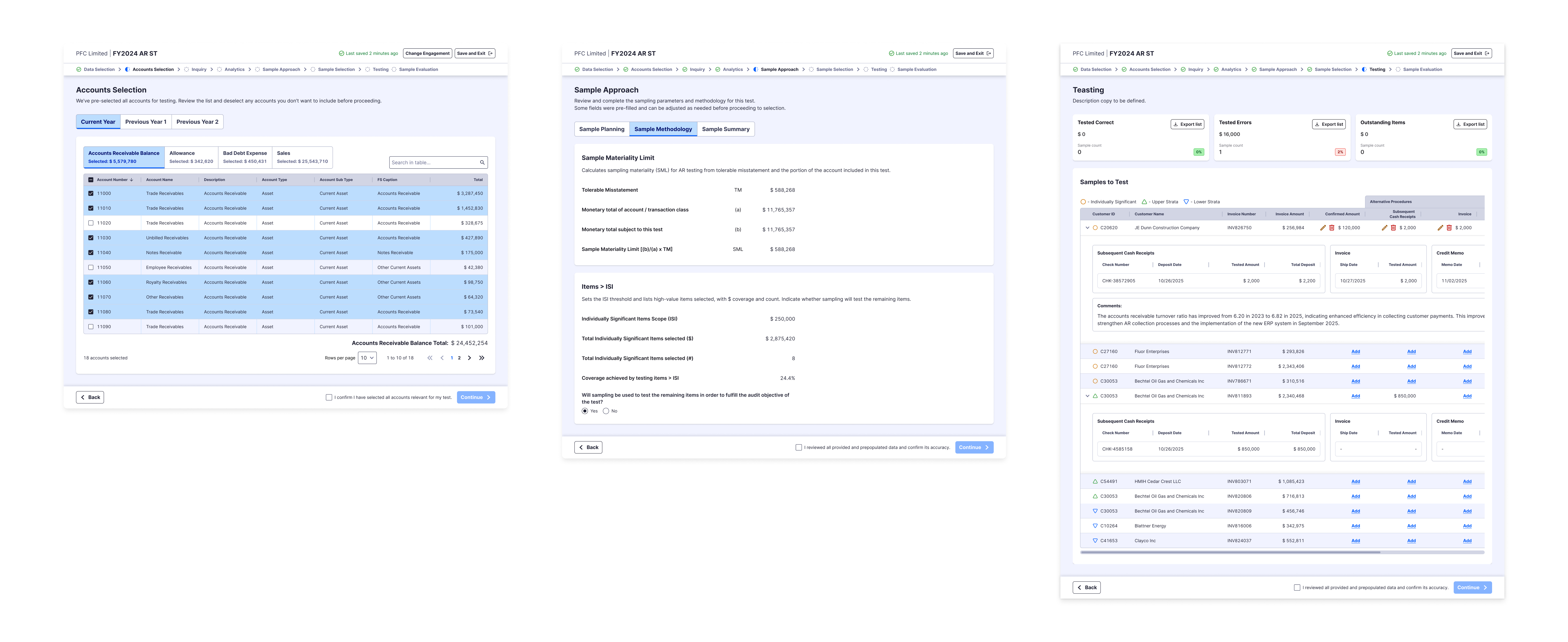

Finally, we designed the Substantive Agent Engine, a reusable and scalable core that powered a growing suite of substantive testing agents. We began with accounts receivable, accounts payable, and investments, initially rolling out to local government and healthcare audits.

This was not about shipping quickly. It was about building something that could scale responsibly.

One of the most consequential decisions in this work centred on data flow and permissions.

My initial vision was for substantive agents to integrate directly with PFX, a core internal system used across CLA. Done well, this would have enabled seamless data flow and role-aware experiences, distinguishing associates, managers, reviewers, and partners at the system level.

Because CLA already operated on Microsoft 365 with global single sign-on, the opportunity was compelling. With the right integration, we could have inherited rich user context automatically and embedded that intelligence directly into the audit workflow.

We explored this path seriously.

However, we made the deliberate decision not to pursue this integration in the first iteration.

CLA’s data landscape had grown organically over many years. Multiple platforms had been built by external vendors on different stacks without a unifying architectural layer. While PFX worked well in isolation, the surrounding infrastructure was too fragmented to safely merge within our constraints.

Pushing ahead would have introduced unacceptable risk.

Extended delivery timelines.

Increased operational complexity.

Failure modes we could not reliably test or contain.

Instead, we prioritised momentum with integrity.

We built a robust, auditable agent ecosystem on top of EngineB’s existing integration engine, accepting limited manual round-tripping between systems. This meant exporting structured data from one environment and importing it into another to complete parts of the workflow.

It was not ideal, and we were explicit about that. But it allowed us to standardise audit execution, deliver value quickly, and validate the platform under real conditions. Crucially, the system was designed so deeper integration could be layered in later once the broader data architecture matured.

Auditors do not want clever interfaces. They want clarity.

We intentionally leaned into familiar patterns, especially tables, so information felt immediately legible. The interface was restrained, predictable, and easy to scan. The goal was not to impress users, but to let them focus on judgment.

We adopted an AI plus human model by design. The system could extract, calculate, and recommend, but it never decided. We introduced deliberate positive friction. Users explicitly confirmed they had reviewed AI outputs before proceeding. Every action was logged. Every step was traceable.

In audit, friction is not a flaw. It is a safeguard.
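
A minimal Python sketch of that confirmation gate follows. All names here (`AgentOutput`, `AuditTrail`, `confirm_review`, `finalise_test`) are hypothetical and chosen for illustration; this shows the pattern of blocking progress until a human has confirmed review, and logging every action, not the platform's actual implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


class ReviewRequired(Exception):
    """Raised when a test is finalised before a human has reviewed the AI output."""


@dataclass
class AuditTrail:
    # Hypothetical in-memory log; a real system would persist this.
    entries: list = field(default_factory=list)

    def record(self, user: str, action: str) -> None:
        # Every action is stored with who did it and when (UTC).
        self.entries.append({
            "user": user,
            "action": action,
            "at": datetime.now(timezone.utc).isoformat(),
        })


@dataclass
class AgentOutput:
    summary: str                       # what the agent extracted or recommended
    reviewed_by: Optional[str] = None  # set only by an explicit human confirmation


def confirm_review(output: AgentOutput, user: str, trail: AuditTrail) -> None:
    """The deliberate friction: the auditor states they have reviewed the output."""
    output.reviewed_by = user
    trail.record(user, "confirmed_review")


def finalise_test(output: AgentOutput, user: str, trail: AuditTrail) -> str:
    """The agent recommends; only a reviewed output can be finalised."""
    if output.reviewed_by is None:
        trail.record(user, "finalise_blocked")
        raise ReviewRequired("AI output must be reviewed before the test is finalised")
    trail.record(user, "finalised")
    return f"finalised by {user}"
```

In this sketch, calling `finalise_test` before `confirm_review` raises `ReviewRequired` and still leaves a log entry, so even blocked attempts remain traceable.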

An auditor began by selecting a client and engagement. Relevant data flowed in automatically. Based on the chosen test, the agent defined required inputs and generated a truly random sample using methodology-approved business logic.

Missing artefacts were gathered, processed in bulk by extraction agents, and fed back into the system. The auditor reviewed the outputs, confirmed their understanding, and finalised the test.

What once took weeks of brittle manual work became structured, repeatable, and defensible, without removing professional judgment.
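
The sampling step in that workflow can be illustrated with a short Python sketch. The function name, the `seed` parameter, and the use of a seeded pseudo-random generator are assumptions made for illustration; the methodology-approved logic itself is not reproduced here. The idea is that every item has an equal chance of selection, and that recording the seed lets a reviewer re-run the draw and get the same sample.

```python
import random
from typing import Sequence


def draw_sample(item_ids: Sequence[str], sample_size: int, seed: int) -> list:
    """Draw a simple random sample without replacement, reproducible from the seed."""
    if not 0 <= sample_size <= len(item_ids):
        raise ValueError("sample_size must be between 0 and the population size")
    # Sort first so the result does not depend on the order the rows arrived in.
    population = sorted(item_ids)
    # A dedicated generator keeps the draw independent of any global random state.
    rng = random.Random(seed)
    return rng.sample(population, sample_size)


# Usage: the same population and seed give the same sample, whatever the input order.
invoice_ids = [f"INV-{i:04d}" for i in range(200)]
first_draw = draw_sample(invoice_ids, 25, seed=2024)
second_draw = draw_sample(list(reversed(invoice_ids)), 25, seed=2024)
```

Sorting before sampling is the design choice that matters for defensibility here: two auditors working from differently ordered exports would otherwise end up testing different items.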

Nothing shipped on assumption.

We validated continuously through research, SME reviews, low- and high-fidelity prototypes, sandbox testing, QA, and live audits. When the first agent was ready, we rolled it out to 100 auditors in production.

Feedback came quickly and often challenged our assumptions.

We triaged relentlessly, separating product issues from engineering constraints, iterating rapidly, and building confidence. From there, we expanded to 1,000 users and then progressively across the organisation, maintaining a constant feedback loop even after global rollout.

The platform did not just save time. It changed behaviour.

We reduced six to eight inconsistent approaches to a single global standard. We saved an estimated 30 to 40 thousand auditor hours, directly addressing burnout during peak audit periods. Methodology teams gained a scalable channel to roll out changes across the organisation. The business gained a credible AI-first foundation for future growth and partner onboarding.

Most importantly, we demonstrated that AI could raise audit quality, not undermine it.

This work reinforced how I approach design at a Staff or Principal level.

Design is an organisational lever.

AI succeeds when accountability is explicit.

Scale comes from structure, not speed.

We did not just modernise a workflow.

We built the infrastructure for how audit can evolve responsibly.